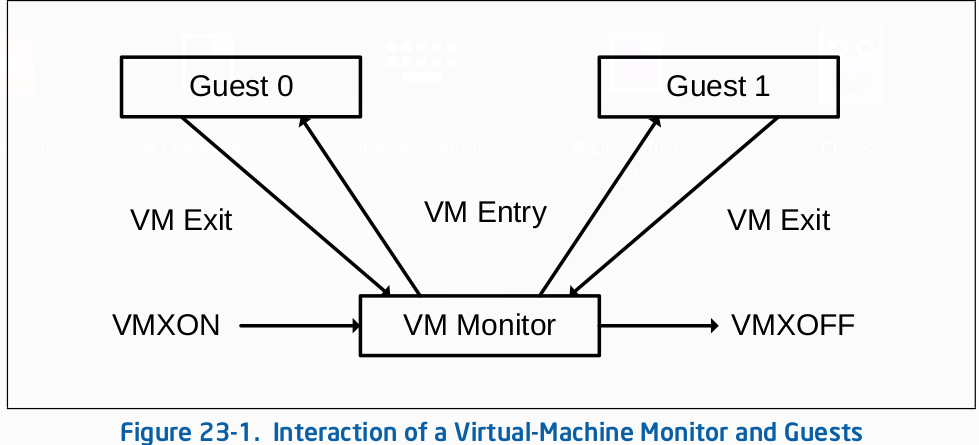

In the previous part of the series we talked about the basics of Intel's virtualization support and learned to write a basic Linux kernel module. In this part we start developing the hypervisor itself.

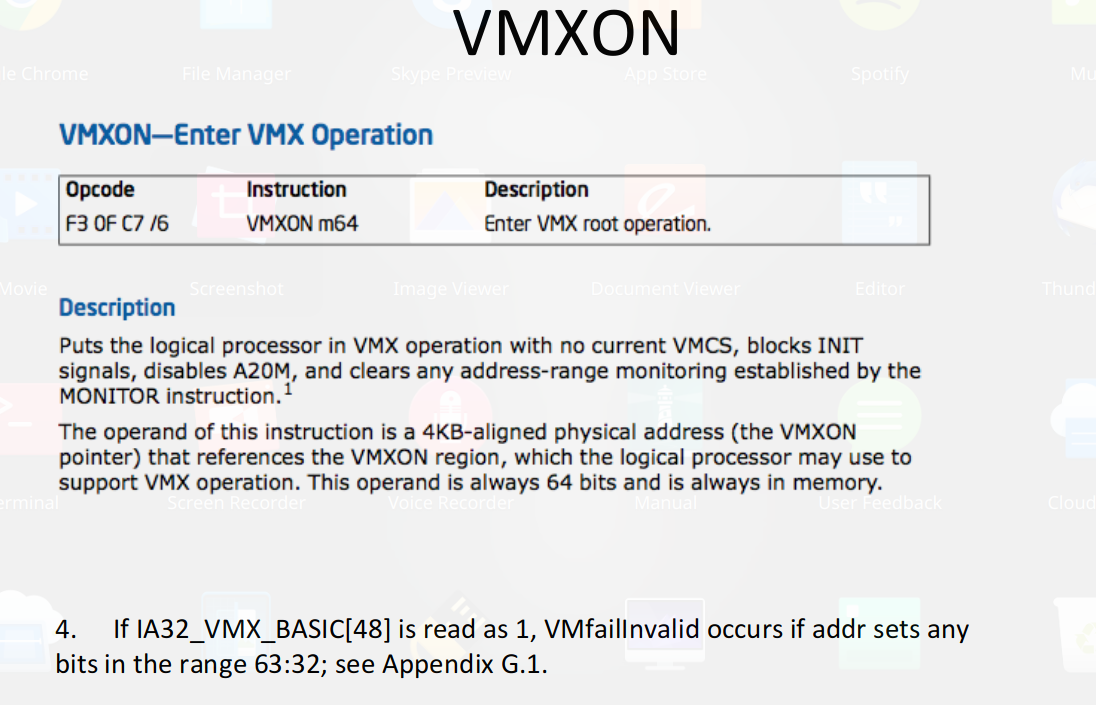

From the above flow we can see that the first step in creating a guest VM is to enter VMX operation with VMXON. Before executing VMXON, however, we need to check that the processor supports VMX and do some initialization.

Note: I have attached the complete code for this part at the end of the article.

Checking support for VMX

To check whether the CPU supports VMX we need to execute cpuid with eax=1. In the result, bit 5 of ecx indicates VMX support. Let's create a simple function to check that.

// looking for CPUID.1:ECX.VMX[bit 5] = 1

bool vmxSupport(void)

{

unsigned int eax = 1, ecx;

// a single asm statement with constraints, so the compiler knows which

// registers CPUID reads (EAX) and overwrites (EAX, EBX, ECX, EDX)

__asm__ __volatile__("cpuid" : "+a"(eax), "=c"(ecx) : : "ebx", "edx");

return (ecx >> 5) & 1;

}
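The same check can be tried from user space before loading any kernel code. The following is only a sketch, assuming a GCC or Clang toolchain that ships the cpuid.h helper header; the names ecx_has_vmx and cpu_reports_vmx are hypothetical and not part of the module in this article.

```c
#include <stdbool.h>
#include <stdint.h>

#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>   /* GCC/Clang helper header providing __get_cpuid() */
#endif

/* Pure helper: test CPUID.1:ECX.VMX (bit 5) in an already-fetched ECX value. */
static bool ecx_has_vmx(uint32_t ecx)
{
    return (ecx >> 5) & 1u;
}

/* Hypothetical user-space probe; always returns false on non-x86 builds. */
static bool cpu_reports_vmx(void)
{
#if defined(__x86_64__) || defined(__i386__)
    unsigned int eax, ebx, ecx, edx;

    /* __get_cpuid() returns 0 when the requested leaf is not supported. */
    if (!__get_cpuid(1, &eax, &ebx, &ecx, &edx))
        return false;
    return ecx_has_vmx((uint32_t)ecx);
#else
    return false;
#endif
}
```

Keeping the bit test separate from the CPUID call makes it easy to check the logic with known ECX values, independent of the machine the code runs on.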

Before executing VMXON we need to set a few bits in the control registers (CRx). The first one is bit 13 (VMXE) of the CR4 register.

#define X86_CR4_VMXE_BIT 13 /* enable VMX virtualization */

#define X86_CR4_VMXE _BITUL(X86_CR4_VMXE_BIT)

bool getVmxOperation(void) {

unsigned long cr4;

// setting CR4.VMXE[bit 13] = 1

__asm__ __volatile__("mov %%cr4, %0" : "=r"(cr4) : : "memory");

cr4 |= X86_CR4_VMXE;

__asm__ __volatile__("mov %0, %%cr4" : : "r"(cr4) : "memory");

}
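To make the bit arithmetic concrete, here is a small user-space sketch of the same mask operation on a plain integer rather than on the real CR4 register. The helper names are hypothetical; only the bit position (13) comes from the code above.

```c
#include <stdint.h>

#define CR4_VMXE_BIT 13
#define CR4_VMXE     (UINT64_C(1) << CR4_VMXE_BIT)   /* mask value 0x2000 */

/* Return the given CR4 image with the VMXE bit set; other bits are untouched. */
static uint64_t cr4_enable_vmxe(uint64_t cr4)
{
    return cr4 | CR4_VMXE;
}

/* Report whether the VMXE bit is set in the given CR4 image. */
static int cr4_vmxe_is_set(uint64_t cr4)
{
    return (cr4 & CR4_VMXE) != 0;
}
```

Because the update is a read-modify-write with OR, applying it twice gives the same result as applying it once, and every other CR4 bit keeps its previous value.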

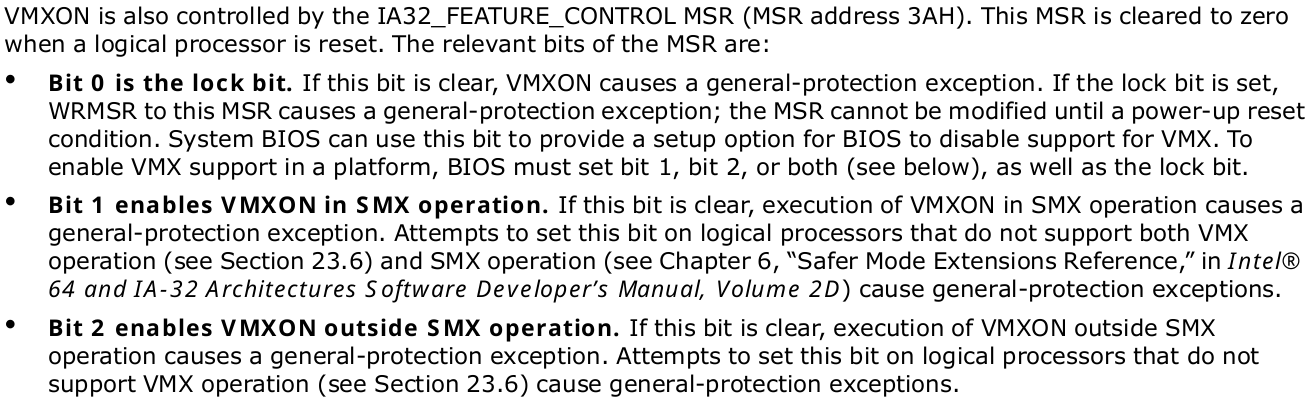

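VMXON is also controlled by the IA32_FEATURE_CONTROL MSR. It may be configured (typically by the firmware) in a way that stops the processor from entering VMX operation. We need to read IA32_FEATURE_CONTROL and, if necessary, set it to allow VMXON.

To execute VMXON outside SMX, both bit 0 (the lock bit) and bit 2 (enable VMXON outside SMX operation) must be set. Once the lock bit is set, the MSR cannot be modified until the next reset, so if it is already locked with bit 2 clear, VMX has to be enabled in the firmware settings instead.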

...

#define FEATURE_CONTROL_VMXON_ENABLED_OUTSIDE_SMX (1<<2)

#define FEATURE_CONTROL_LOCKED (1<<0)

#define MSR_IA32_FEATURE_CONTROL 0x0000003a

bool getVmxOperation(void) {

...

...

...

/*

* Configure IA32_FEATURE_CONTROL MSR to allow VMXON:

* Bit 0: Lock bit. If clear, VMXON causes a #GP.

* Bit 2: Enables VMXON outside of SMX operation. If clear, VMXON

* outside of SMX causes a #GP.

*/

required = FEATURE_CONTROL_VMXON_ENABLED_OUTSIDE_SMX;

required |= FEATURE_CONTROL_LOCKED;

feature_control = __rdmsr1(MSR_IA32_FEATURE_CONTROL);

printk(KERN_INFO "RDMSR output is %ld\n", (long)feature_control);

if ((feature_control & required) != required) {

wrmsr(MSR_IA32_FEATURE_CONTROL, feature_control | required, low1);

}

}
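The two bits give three situations worth telling apart before writing the MSR. The sketch below models that decision on a plain 64-bit value. It is an illustration with hypothetical names (fc_state, classify_feature_control), not the check used in the module above, which writes the MSR whenever either bit is missing.

```c
#include <stdint.h>

#define FC_LOCKED            (UINT64_C(1) << 0)   /* bit 0: lock bit */
#define FC_VMXON_OUTSIDE_SMX (UINT64_C(1) << 2)   /* bit 2: VMXON outside SMX */

enum fc_state {
    FC_READY,        /* locked with bit 2 set: VMXON is allowed as-is */
    FC_NEEDS_WRITE,  /* unlocked: software may set both bits with WRMSR */
    FC_BLOCKED       /* locked with bit 2 clear: WRMSR would raise #GP */
};

/* Classify a raw IA32_FEATURE_CONTROL value. */
static enum fc_state classify_feature_control(uint64_t msr)
{
    if (msr & FC_LOCKED)
        return (msr & FC_VMXON_OUTSIDE_SMX) ? FC_READY : FC_BLOCKED;
    return FC_NEEDS_WRITE;
}
```

A module could use a check like this to print a clear message and bail out in the blocked case, instead of attempting a write that the processor will reject.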

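I am importing wrmsr from asm/msr.h; it takes the low and high 32-bit halves of the value as separate arguments, so the high half is passed as low1 (0) here. __rdmsr1 is my own implementation: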

#define EAX_EDX_VAL(val, low, high) ((low) | (high) << 32)

#define EAX_EDX_RET(val, low, high) "=a" (low), "=d" (high)

static inline unsigned long long notrace __rdmsr1(unsigned int msr)

{

DECLARE_ARGS(val, low, high);

asm volatile("1: rdmsr\n"

"2:\n"

_ASM_EXTABLE_HANDLE(1b, 2b, ex_handler_rdmsr_unsafe)

: EAX_EDX_RET(val, low, high) : "c" (msr));

return EAX_EDX_VAL(val, low, high);

}
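RDMSR returns the 64-bit MSR value split across EDX (high half) and EAX (low half), which is what the EAX_EDX_VAL macro stitches back together. A minimal user-space sketch of that combination, with hypothetical helper names, looks like this:

```c
#include <stdint.h>

/* Combine the EDX:EAX pair returned by RDMSR into one 64-bit value.
 * The casts matter: shifting a 32-bit operand by 32 would be undefined. */
static uint64_t combine_edx_eax(uint32_t low, uint32_t high)
{
    return (uint64_t)low | ((uint64_t)high << 32);
}

/* Split a 64-bit value back into the halves that WRMSR expects. */
static uint32_t msr_low(uint64_t value)
{
    return (uint32_t)value;
}

static uint32_t msr_high(uint64_t value)
{
    return (uint32_t)(value >> 32);
}
```

In the kernel macro the same effect comes from declaring low and high as unsigned long, which is 64 bits wide on x86-64.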

The final CRx-related change is to make CR0 and CR4 satisfy the restrictions that VMX operation places on them.

In simple terms, every bit that is 1 in MSR_IA32_VMX_CR0_FIXED0 must be set in CR0, and every bit that is 0 in MSR_IA32_VMX_CR0_FIXED1 must be cleared in CR0. The same rule applies to CR4 with MSR_IA32_VMX_CR4_FIXED0 and MSR_IA32_VMX_CR4_FIXED1.

...

#define MSR_IA32_VMX_CR0_FIXED0 0x00000486

#define MSR_IA32_VMX_CR0_FIXED1 0x00000487

#define MSR_IA32_VMX_CR4_FIXED0 0x00000488

#define MSR_IA32_VMX_CR4_FIXED1 0x00000489

bool getVmxOperation(void) {

...

...

/*

* Ensure bits in CR0 and CR4 are valid in VMX operation:

* - Bit X is 1 in _FIXED0: bit X is fixed to 1 in CRx.

* - Bit X is 0 in _FIXED1: bit X is fixed to 0 in CRx.

*/

__asm__ __volatile__("mov %%cr0, %0" : "=r"(cr0) : : "memory");

cr0 &= __rdmsr1(MSR_IA32_VMX_CR0_FIXED1);

cr0 |= __rdmsr1(MSR_IA32_VMX_CR0_FIXED0);

__asm__ __volatile__("mov %0, %%cr0" : : "r"(cr0) : "memory");

__asm__ __volatile__("mov %%cr4, %0" : "=r"(cr4) : : "memory");

cr4 &= __rdmsr1(MSR_IA32_VMX_CR4_FIXED1);

cr4 |= __rdmsr1(MSR_IA32_VMX_CR4_FIXED0);

__asm__ __volatile__("mov %0, %%cr4" : : "r"(cr4) : "memory");

}
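The AND-then-OR pattern above can be checked in isolation. The sketch below applies the same rule to plain integers and adds a validity test. The function names are hypothetical, and any FIXED0/FIXED1 values you feed it for experimentation are illustrative; the real ones must be read from the MSRs on the target machine.

```c
#include <stdbool.h>
#include <stdint.h>

/* Force a control-register image to satisfy the fixed-bit rules:
 * bits that are 0 in fixed1 are cleared, bits that are 1 in fixed0 are set. */
static uint64_t apply_fixed_bits(uint64_t cr, uint64_t fixed0, uint64_t fixed1)
{
    return (cr & fixed1) | fixed0;
}

/* Check whether a control-register image already satisfies the rules. */
static bool cr_is_valid_for_vmx(uint64_t cr, uint64_t fixed0, uint64_t fixed1)
{
    bool required_ones_set = (cr & fixed0) == fixed0;
    bool forbidden_ones_clear = (cr & ~fixed1) == 0;

    return required_ones_set && forbidden_ones_clear;
}
```

For example, with an illustrative FIXED0 of 0x80000021 and FIXED1 of 0xffffffff, a register image of 0x11 becomes 0x80000031 after apply_fixed_bits, and an image that is already valid is returned unchanged.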

The last step before entering VMX operation is to allocate a 4 KB-aligned block of memory that the processor uses to support VMX operation (also called the VMXON region).

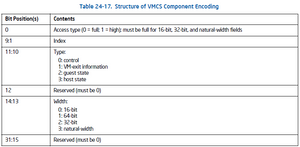

To use this memory as the VMXON region we need to zero it out and write the VMCS revision identifier into the first 31 bits (bits 30:0) of the buffer.

...

...

#define MSR_IA32_VMX_BASIC 0x00000480

// getting vmcs revision identifier

static inline uint32_t vmcs_revision_id(void)

{

return __rdmsr1(MSR_IA32_VMX_BASIC);

}

...

bool getVmxOperation(void) {

...

...

// allocating 4 KiB (4096 bytes) of memory for vmxon region

vmxon_region = kzalloc(MYPAGE_SIZE,GFP_KERNEL);

if(vmxon_region==NULL){

printk(KERN_INFO "Error allocating vmxon region\n");

return false;

}

vmxon_phy_region = __pa(vmxon_region);

*(uint32_t *)vmxon_region = vmcs_revision_id();

}
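The layout of the region can be tried out in user space as well. This sketch uses aligned_alloc from C11 as a stand-in for the kernel allocator, and the function name make_vmxon_region is hypothetical. It takes a raw IA32_VMX_BASIC value as a parameter instead of reading the MSR, and masks it to bits 30:0 to make the revision identifier explicit.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define VMXON_REGION_SIZE 4096u

/* Allocate a zeroed, 4 KiB-aligned buffer and store the VMCS revision
 * identifier (bits 30:0 of IA32_VMX_BASIC) in its first 4 bytes.
 * Returns NULL on allocation failure; the caller frees it with free(). */
static void *make_vmxon_region(uint64_t vmx_basic)
{
    uint32_t revision = (uint32_t)(vmx_basic & 0x7fffffffu);
    void *region = aligned_alloc(VMXON_REGION_SIZE, VMXON_REGION_SIZE);

    if (region == NULL)
        return NULL;

    memset(region, 0, VMXON_REGION_SIZE);
    memcpy(region, &revision, sizeof(revision));
    return region;
}
```

In the kernel module the address passed to VMXON must be the physical address of the buffer, which is why the article's code converts the pointer with __pa; the user-space sketch only demonstrates alignment and contents.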

After all these changes we are ready to enter the VMX operation mode.

...

// VMXON instruction - Enter VMX operation

static inline int _vmxon(uint64_t phys)

{

uint8_t ret;

__asm__ __volatile__ ("vmxon %[pa]; setna %[ret]"

: [ret]"=rm"(ret)

: [pa]"m"(phys)

: "cc", "memory");

return ret;

}

...

bool getVmxOperation(void) {

...

...

if (_vmxon(vmxon_phy_region))

return false;

return true;

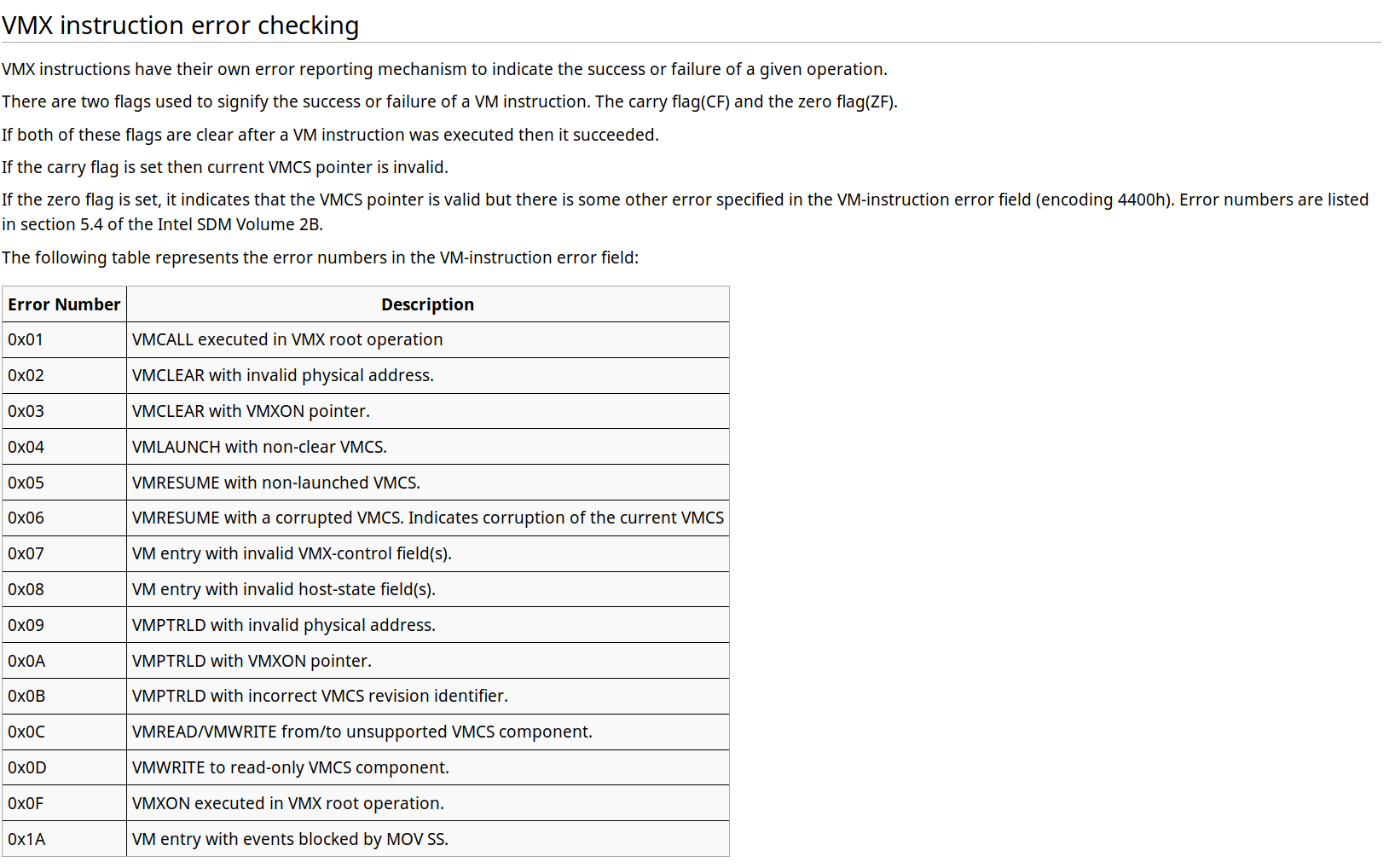

}This will lead us to VMXON operation. If not then you can debug the issue by taking help of setna/setne instructions and below information.
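The flag convention that setna relies on can be written down as a small decoder. This is a sketch with hypothetical names. It only covers failures reported through the flags; a VMXON attempt can also fault outright, for example with #UD when CR4.VMXE is clear or #GP when IA32_FEATURE_CONTROL does not permit it.

```c
#include <stdbool.h>

enum vmx_result {
    VMX_SUCCEED,       /* CF = 0 and ZF = 0 */
    VMX_FAIL_INVALID,  /* CF = 1: failed, no current VMCS to hold an error code */
    VMX_FAIL_VALID     /* ZF = 1: failed, error number stored in the current VMCS */
};

/* Decode the outcome of a VMX instruction from the CF and ZF flags. */
static enum vmx_result vmx_result_from_flags(bool cf, bool zf)
{
    if (cf)
        return VMX_FAIL_INVALID;
    if (zf)
        return VMX_FAIL_VALID;
    return VMX_SUCCEED;
}

/* What setna stores: 1 when CF or ZF is set, which means any flagged failure. */
static bool setna_value(bool cf, bool zf)
{
    return cf || zf;
}
```

Using setc and setz separately instead of setna would let the caller distinguish the two failure kinds, at the cost of a slightly longer asm statement.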

If you were able to execute VMXON successfully, congratulations. Otherwise, you can compare your code against mine below to find the mistake.

#include <asm/processor.h>

#include <linux/init.h>

#include <linux/module.h>

#include <linux/kernel.h> /* printk(), KERN_INFO */

#include <linux/const.h>

#include <linux/errno.h>

#include <linux/fs.h>

#include <linux/types.h>

#include <linux/fcntl.h>

#include <linux/poll.h>

#include <linux/smp.h>

#include <linux/major.h>

#include <linux/device.h>

#include <linux/cpu.h>

#include <linux/notifier.h>

#include <linux/uaccess.h>

#include <linux/kvm.h>

#include <linux/gfp.h>

#include <linux/slab.h>

#include <asm/asm.h>

#include <asm/errno.h>

#include <asm/kvm.h>

#include <asm/cpumask.h>

#include <asm/msr.h> /* wrmsr() */

#define MYPAGE_SIZE 4096

#define X86_CR4_VMXE_BIT 13 /* enable VMX virtualization */

#define X86_CR4_VMXE _BITUL(X86_CR4_VMXE_BIT)

#define FEATURE_CONTROL_VMXON_ENABLED_OUTSIDE_SMX (1<<2)

#define FEATURE_CONTROL_LOCKED (1<<0)

#define MSR_IA32_FEATURE_CONTROL 0x0000003a

#define MSR_IA32_VMX_BASIC 0x00000480

#define MSR_IA32_VMX_CR0_FIXED0 0x00000486

#define MSR_IA32_VMX_CR0_FIXED1 0x00000487

#define MSR_IA32_VMX_CR4_FIXED0 0x00000488

#define MSR_IA32_VMX_CR4_FIXED1 0x00000489

#define EAX_EDX_VAL(val, low, high) ((low) | (high) << 32)

#define EAX_EDX_RET(val, low, high) "=a" (low), "=d" (high)

// CH 30.3, Vol 3

// VMXON instruction - Enter VMX operation

static inline int _vmxon(uint64_t phys)

{

uint8_t ret;

__asm__ __volatile__ ("vmxon %[pa]; setna %[ret]"

: [ret]"=rm"(ret)

: [pa]"m"(phys)

: "cc", "memory");

return ret;

}

static inline unsigned long long notrace __rdmsr1(unsigned int msr)

{

DECLARE_ARGS(val, low, high);

asm volatile("1: rdmsr\n"

"2:\n"

_ASM_EXTABLE_HANDLE(1b, 2b, ex_handler_rdmsr_unsafe)

: EAX_EDX_RET(val, low, high) : "c" (msr));

return EAX_EDX_VAL(val, low, high);

}

// CH 24.2, Vol 3

// getting vmcs revision identifier

static inline uint32_t vmcs_revision_id(void)

{

return __rdmsr1(MSR_IA32_VMX_BASIC);

}

// CH 23.7, Vol 3

// Enter in VMX mode

bool getVmxOperation(void) {

unsigned long cr4;

unsigned long cr0;

uint64_t feature_control;

uint64_t required;

long int vmxon_phy_region = 0;

uint64_t *vmxon_region;

u32 low1 = 0;

// setting CR4.VMXE[bit 13] = 1

__asm__ __volatile__("mov %%cr4, %0" : "=r"(cr4) : : "memory");

cr4 |= X86_CR4_VMXE;

__asm__ __volatile__("mov %0, %%cr4" : : "r"(cr4) : "memory");

/*

* Configure IA32_FEATURE_CONTROL MSR to allow VMXON:

* Bit 0: Lock bit. If clear, VMXON causes a #GP.

* Bit 2: Enables VMXON outside of SMX operation. If clear, VMXON

* outside of SMX causes a #GP.

*/

required = FEATURE_CONTROL_VMXON_ENABLED_OUTSIDE_SMX;

required |= FEATURE_CONTROL_LOCKED;

feature_control = __rdmsr1(MSR_IA32_FEATURE_CONTROL);

printk(KERN_INFO "RDMSR output is %ld\n", (long)feature_control);

if ((feature_control & required) != required) {

wrmsr(MSR_IA32_FEATURE_CONTROL, feature_control | required, low1);

}

/*

* Ensure bits in CR0 and CR4 are valid in VMX operation:

* - Bit X is 1 in _FIXED0: bit X is fixed to 1 in CRx.

* - Bit X is 0 in _FIXED1: bit X is fixed to 0 in CRx.

*/

__asm__ __volatile__("mov %%cr0, %0" : "=r"(cr0) : : "memory");

cr0 &= __rdmsr1(MSR_IA32_VMX_CR0_FIXED1);

cr0 |= __rdmsr1(MSR_IA32_VMX_CR0_FIXED0);

__asm__ __volatile__("mov %0, %%cr0" : : "r"(cr0) : "memory");

__asm__ __volatile__("mov %%cr4, %0" : "=r"(cr4) : : "memory");

cr4 &= __rdmsr1(MSR_IA32_VMX_CR4_FIXED1);

cr4 |= __rdmsr1(MSR_IA32_VMX_CR4_FIXED0);

__asm__ __volatile__("mov %0, %%cr4" : : "r"(cr4) : "memory");

// allocating 4 KiB (4096 bytes) of memory for vmxon region

vmxon_region = kzalloc(MYPAGE_SIZE,GFP_KERNEL);

if(vmxon_region==NULL){

printk(KERN_INFO "Error allocating vmxon region\n");

return false;

}

vmxon_phy_region = __pa(vmxon_region);

*(uint32_t *)vmxon_region = vmcs_revision_id();

if (_vmxon(vmxon_phy_region))

return false;

return true;

}

// CH 23.6, Vol 3

// Checking the support of VMX

bool vmxSupport(void)

{

unsigned int eax = 1, ecx;

// a single asm statement with constraints, so the compiler knows which

// registers CPUID reads (EAX) and overwrites (EAX, EBX, ECX, EDX)

__asm__ __volatile__("cpuid" : "+a"(eax), "=c"(ecx) : : "ebx", "edx");

// CPUID.1:ECX.VMX[bit 5] = 1 means VMX is supported

return (ecx >> 5) & 1;

}

static int __init start_init(void)

{

if (!vmxSupport()){

printk(KERN_INFO "VMX support not present! EXITING");

return 0;

}

else {

printk(KERN_INFO "VMX support present! CONTINUING");

}

if (!getVmxOperation()) {

printk(KERN_INFO "VMX Operation failed! EXITING");

return 0;

}

else {

printk(KERN_INFO "VMX Operation succeeded! CONTINUING");

}

asm volatile ("vmxoff\n" : : : "cc");

return 0;

}

static void __exit end_exit(void)

{

printk(KERN_INFO "Bye Bye\n");

}

module_init(start_init);

module_exit(end_exit);

MODULE_LICENSE("Dual BSD/GPL");

MODULE_AUTHOR("Shubham Dubey");

MODULE_DESCRIPTION("Lightweight Hypervisor");

Our first hurdle, entering VMX operation, is cleared. In the next part we will build on this and continue the work.

You can see the complete source code here: https://github.com/shubham0d/ProtoVirt.

References:

Advanced x86: Virtualization with Intel VT-x : http://opensecuritytraining.info/AdvancedX86-VTX.html

Linux kernel VMX implementation: https://github.com/torvalds/linux/blob/c6dd78fcb8eefa15dd861889e0f59d301cb5230c/tools/testing/selftests/kvm/lib/x86_64/vmx.c